The

Teradata MultiLoad utility is an efficient way to deal with batch

maintenance of large databases. Teradata MultiLoad is a command-driven

utility for fast, high-volume maintenance on multiple tables and views

in Teradata Database. A single Teradata MultiLoad job performs a number

of different import and delete tasks on database tables and views:

- Each Teradata MultiLoad import task can do multiple data insert, update, and delete functions on up to five different tables or views.

- Each Teradata MultiLoad delete task can remove large numbers of rows from a single table.

- Teradata MultiLoad to import data from:Disk or tape files (using a custom Access Module) on a mainframe-attached client system Input files on a network-attached workstation.

- This feature rich utility can perform multiple types of DML tasks, including INSERT, UPDATE, DELETE and UPSERT on up to five (5) empty or populated target tables at a time.

- MultiLoad is the utility of choice when it comes to loading populated tables in the batch environment. As the volume of data being loaded or updated in a single block, the performance of Multi-Load improves.

Mload Restriction

- Unique Secondary Indexes are not supported on a Target Table. MultiLoad does not support Unique Secondary Indexes (USIs). But unlike FastLoad, it does support the use of Non-Unique Secondary Indexes (NUSIs) because the index subtable row is on the same AMP as the data row. MultiLoad uses every AMP independently and in parallel. If two AMPs must communicate, they are not independent. Therefore, a NUSI (same AMP) is fine, but a USI (different AMP) is not.

- Referential Integrity is not supported:- MultiLoad will not load data into tables that are defined with Referential Integrity(RI).

- Triggers--Triggers cause actions on related tables based upon what happens in a target table. Again, this is a multi-AMP operation and to a different table. To keep MultiLoad running smoothly, disable all Triggers prior to using it.

- No concatenation of input files is allowed:- MultiLoad does not want you to do this because it could impact are restart if the files were concatenated in a different sequence or data was deleted between runs.

- No Join Indexes:- Must drop all Join Indexes before running a MultiLoad and then recreate them after the load is finished.

There are Five Phase of MultiLoad

- Preliminary Phase:- Its Basic setup phase.Its used for several preliminary set-up activities for a successful data load.

- DML Transaction Phase:- All the SQL Data Manipulation Language (DML) statements are sent to Teradata database as Multilaod supports multiple DML functions.

- Acquisition Phase:- Once the setup completes the PE's plan stored on each AMP. Then Locks the table headers and the actual input data will also be stored in the worktable.

- Application Phase:- In this phase all DML operations are applied on target tables.

- Cleanup Phase:- Table locks will be released and all the intermediate work tables will be dropped.

LOGTABLE EMPDB.EMP_TABLE_LOG;

.LOGON TDDB/USERNAME,PWD;

TABLES EMPDB.EMP_TABLE

WORK TABLES EMPDB.EMP_WT

ERROR TABLE EMPDB.EMP_ET EMPDB.EMP_UV;

.FIELD SALARY * DECIMAL(8,2);

UPDATE EMPDB.EMP_TABLE

SET SALARY=:SALARY

WHERE EMP_NUM=:EMP_NUM;

LAYOUT FILECOLDESC

APPLY EMP_UPD;

.LOGOFF;

Mload Script Skeltom

- Setting up a Logtable

- Logging onto Teradata.

- Identifying the Target, Work and Error tables

- Defining the structure INPUT file

- Defining the DML activities to occur

- Naming the IMPORT file and its format

- Telling MultiLoad to use a particular LAYOUT

- Telling the system to start DML activity

- Finishing the loding and log off terdata.

Log Table: Log table stores the processing record information during load.This table contains one row for every Multiload running on the system.

Work Table: MultiLoad will automatically create one worktable for each target table. You need to specify the name of work table in the scripts itself.

The Purpose of work tables are

- To perform DM tasks

- APPLYing the input data to the AMPs.

- The first Error Table (ET). It contains all translation and constraint errors that may occur while the data is being acquired from the source(s)

- The second Uniqueness Violation (UV) table that stores rows with duplicate values for Unique Primary Indexes (UPI).

Referential Integrity and MultiLoad Problems

In

Teradata when using MultiLoad the Referential Integrity (RI) can trip

you up. There is a problem when using FastLoad or MultiLoad and if you

expect the utility to take care of any Referential Integrity problems.

Teradata always provides a Referential Integrity Error Table unless you

are using FastLoad. You must load the table first and then create the

Foreign Key references.

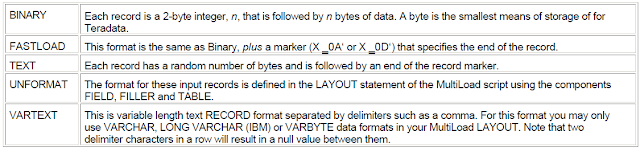

Supported Input Formats

Data

input files come in a variety of formats but MultiLoad is flexible

enough to handle many of them. MultiLoad supports the following five

format options: BINARY, FASTLOAD, TEXT, UNFORMAT and VARTEXT.

A MultiLoad That INSERTS and UPDATES using Two Different Input Files

This

script example is designed to show MultiLoad IMPORT in a more complex

form. It uses two different flat files for sources and then INSERTs

records from the first flat file into the Employee_Table. Then it uses

the second flat file and UPDATE’s the Department_Table. The actual

script is in the left column and our comments are on the right.

Step One: Setting up a Logtable and Logging onto Teradata--MultiLoad

requires you specify a log table right at the outset with the .LOGTABLE

command. Immediately after this you log onto Teradata using the .LOGON

command. Instead of using the Logon command we have used a .RUN File

which contains our logon information, but now it is hidden from anyone

seeing it.

Step Two: Identifying the Target, Work and Error tables--In this step of the script you must tell Teradata which TABLES, WORKTABLES AND ERROR TABLES to use.

Step Three: Identifying the Target, Work and Error tables--MultiLoad is going to need to know the structure the INPUT flat files.

Step Four: Defining the DML activities to occur--The .DML LABEL names and defines the SQL that is to execute. In this example we are going to UPDATE.

Step Five: Naming the INPUT file and its format type--This

step is vital! Using the .IMPORT command, we have identified the INFILE

data as being contained in a file called "mload_flat_file.txt". Next,

we referenced the LAYOUT named FileColDesc1 to describe the fields in

the record. Finally, we told MultiLoad to APPLY the DML LABEL called

EMP_UPD.

A MultiLoad That INSERTS and UPDATES using Two Different Input Files Continued

This is a continuation of the explanation of our script.

Step Four: Defining the DML activities to occur--The

DML LABEL names and defines the SQL that is to execute. In this example

we are going to INSERT into the Employee_Table and then the next DML

Label will UPDATE the Department_Table.

Step Five: Naming the INPUT file and its format type --. This

step is vital! Using the .IMPORT command, we have identified the INFILE

data as being contained in a file called "EMP_DATA.txt" and

"DEPT_DATA.txt". Next, we reference the LAYOUTs to describe the fields

in the flat files. Finally, we told MultiLoad to APPLY both DML LABELs.

A MultiLoad That UPSERTS

This

script example is designed to show a MultiLoad UPSERT. An UPSERT will

first attempt to update a table, but if the row doesn’t exist to be

updated, then Teradata will perform an INSERT. If the UPDATE takes place

then the INSERT is skipped. The INSERT is only attempted if the UPDATE

fails.

Step One: Setting up a Logtable and Logging onto Teradata —

MultiLoad requires you specify a log table right at the outset with the

.LOGTABLE command. Immediately after this you log onto Teradata using

the .LOGON command.

Step Two: Identifying the Target, Work and Error tables —

In this step of the script you must tell Teradata which TABLES,

WORKTABLES AND ERROR TABLES to use. All you must do is name the tables

and specify what database they are in. Work tables and error tables are

created automatically for you.

Step Three: Defining the INPUT flat file record structure — MultiLoad is going to need to know the structure the INPUT flat files.

Step Four: Defining the DML activities to occur —

The DML LABEL is given a name and we called this one UPSERTER. In this

example we are going to UPDATE the Student_Profile table, but if the row

doesn‘t exist we will then INSERT it into the Student_Profile Table.

Step Five:Naming the INPUT file and its format type--This

step is vital! Using the .IMPORT command, we have identified the INFILE

data as being contained in a file called "UPSERT.DAT". Next, we

reference the LAYOUT to describe the fields in the flat file. Finally,

we told MultiLoad to APPLY the DML LABEL UPSERTER. Warning Do you see

the DO INSERT FOR MISSING UPDATE ROWS statement bolded in yellow? That

needs to be in the script or it will not work properly.

A MultiLoad That DELETES

Two MultiLoad Modes: IMPORT and DELETE

MultiLoad

provides two types of operations via modes: IMPORT and DELETE. In

MultiLoad IMPORT mode, you have the freedom to "mix and match" up to

twenty (20) INSERTs, UPDATEs or DELETEs on up to five target tables. The

execution of the DML statements is not mandatory for all rows in a

table. Instead, their execution hinges upon the conditions contained in

the APPLY clause of the script. Once again, MultiLoad demonstrates its

user-friendly flexibility. For UPDATEs or DELETEs to be successful in

IMPORT mode, they must reference the Primary Index in the WHERE clause.

The

MultiLoad DELETE mode is used to perform a global (all AMP) delete on

just one table. The reason to use .BEGIN DELETE MLOAD is that it

bypasses the Transient Journal (TJ) and can be RESTARTED if an error

causes it to terminate prior to finishing. When performing in DELETE

mode, the DELETE SQL statement cannot reference the Primary Index in the

WHERE clause. This due to the fact that a primary index access is to a

specific AMP; this is a global operation.

The

other factor that makes a DELETE mode operation so good is that it

examines an entire block of rows at a time. Once all the eligible rows

have been removed, the block is written one time and a checkpoint is

written. So, if a restart is necessary, it simply starts deleting rows

from the next block without a checkpoint. This is a smart way to

continue.Remember, when using the TJ all deleted rows are put back into

the table from the TJ as a rollback. A rollback can take longer to

finish then the delete. MultiLoad does not do a rollback; it does a

restart.

Notes..

Once the multiload get failed before starting the load again following things need to be consider.

1. Release mload from the table (use below query)

Release mload table name

2. Now Drop all the four table say ET_table_name, WT_table_name, LT_table_name, UT_table_name

If our job fails at our application phase, we have to release the lock on the mloaded table.

RELEASE MLOAD databasename.tablename IN APPLY

Once the above process completed then start from the beginning.

Error code 2571

Mload

can't be released. This error occurs when table does not exist or user

does not have release mload access or table is not in application phase

(using IN APPLY) or Mload is still active on target table.

Error Code 2652

When

user try to access the table that is being mloaded. In this scenario

use locking with access modifier. (Operation not allowed - Table is

being mloaded)

Formats of input flat file

- Binary

- Fastload

- Text

- Unformat

- Vartext

IMPORTANT FACTS

Mload

delete is faster than nprmal delete statement because in mload deletion

happens with 64kbytes data blocks where as normal delete statement

deletes the data row by row. Transient journal maintain for the normal

delete command , Teradata utilities does not support Transient Journal

loading.

How Many error tables in Mload and what are there uses??

For

Multiload Utility, 2 Error tables are required for each target table,

if you dont explicitly mention the names Mload will automatically create

the same with ET_ and UV_. The job of this error tables are similar to

that of FLOAD i.e. ET_ Error table is for data issue and UV_ error table

is for UPI violation.

Merge Insert in Mload

.Logtable Logtable003;

.Logon tpx/uname,pass;

.Begin Import Mload

tables tb_c3; /*target table*/

.Layout Layoutname;

.Field Country varchar(10); /*src column name*/

.Field State varchar(10); /*src column name*/

.Field Revenue number; /*src column name*/

.DML Label DMLlabelname

Do insert for missing update rows;

update tb_c3

set Country=:Country,

State=:State,

Revenue=:Revenue

where State=:State and Country=:Country

Insert into tb_c3(Country,Revenue) values (:Country,:Revenue);

.End Mload;

/* what should I write here as you mentioned after End Mload*/

.Logoff;

RELEASE MLOAD; --for acquisiton

RELEASE MLOAD IN APPLY; --For application

Error Codes 2571

Mload

can't be released. This error occurs when table does not exist or user

does not have release mload access or table is not in application phase

(using IN APPLY) or Mload is still active on target table.

Error Codes 2652

When

user try to access the table that is being mloaded. In this scenario

use locking with access modifier. (Operation not allowed - Table is

being mloaded)

Now

there can be up to 30 concurrent FastLoad and MultiLoad jobs, but

remember it is up to each individual company to determine if this is too

many because of the performance hit.

For

FastExport jobs up to 60 can run concurrently. The only caveat here is

that 60 FastExports can run simultaneously (minus the number of active

FastLoad and MultiLoad jobs also running).This new feature is actually

controlled by a new DBS Control parameter named MaxLoadAWT, which

controls AMP Worker Tasks (AWT). When MaxLoadAWT is set to zero then it

is like going back in time to pre-V2R6.0 where only 15 FastLoad,

MultiLoad and FastExports can run max.

When

MaxLoadAWT is greater than zero the new feature is active. Each AMP can

perform 80 things at once, thus meaning that 80 AMP Worker Tasks per

AMP. The MaxLoadAWT should never exceed more than 48 or the AMPs would

not be able to do much else during the load.

No comments:

Post a Comment